Using the Cluster

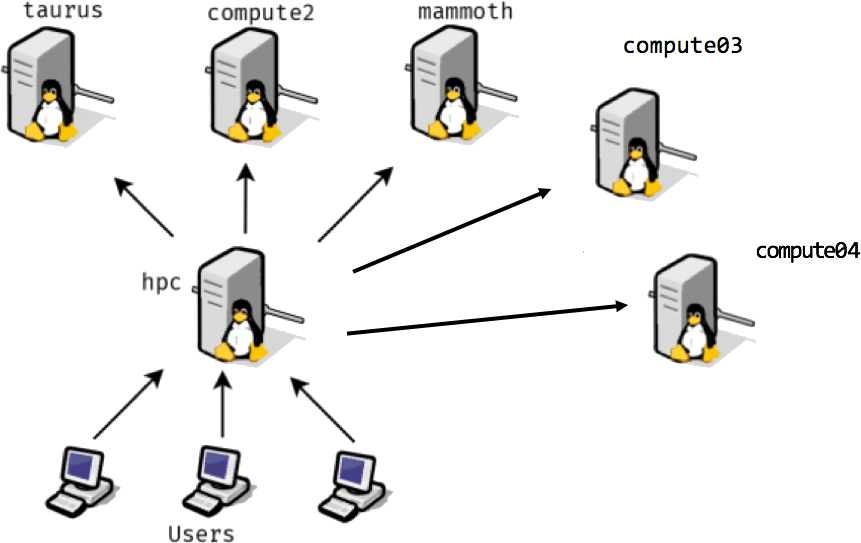

ILRI's high-performance computing "cluster" is currently composed of the following dedicated machines:

- hpc: main login node, "master" of the cluster

- taurus, compute2, compute04: used for batch and interactive jobs like BLAST, structure, R, etc.

- mammoth: used for high-memory jobs like genome assembly (mira, newbler, abyss, etc.)

- compute03: fast CPUs, but few of them

To get access to the cluster, talk to Dedan Githae (he sits in BecA). Once you have access, read up on SLURM to learn how to submit jobs to the cluster.
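As a rough illustration of what submitting a job involves, here is a minimal sketch that generates a SLURM batch script. The `#SBATCH` options shown are standard SLURM directives, but the partition name `batch`, the job name, and the BLAST command line are assumptions made up for this example; check the actual partition names on the cluster (for example with `sinfo`) before using them.

```python
def make_batch_script(job_name, command, partition="batch", cpus=1):
    """Return the text of a minimal SLURM batch script.

    The default partition name "batch" is a placeholder, not a
    confirmed partition on this cluster.
    """
    lines = [
        "#!/usr/bin/env bash",
        f"#SBATCH --job-name={job_name}",
        f"#SBATCH --partition={partition}",
        f"#SBATCH --cpus-per-task={cpus}",
        # %x expands to the job name and %j to the job ID
        "#SBATCH --output=%x-%j.out",
        "",
        command,
    ]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # Hypothetical BLAST job; the input and output file names are illustrative.
    script = make_batch_script(
        "blast-test",
        "blastn -query seqs.fa -db nt -out hits.txt",
        cpus=4,
    )
    print(script)
```

Saving the printed text to a file such as `blast-test.sbatch` and running `sbatch blast-test.sbatch` on hpc would queue the job; `squeue` then shows its status.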

Cluster Organization

The cluster is arranged in a master/slave configuration; users log in to hpc (the master) and use it as a "jumping off point" to the rest of the cluster. Here's a diagram of the topology:

Detailed Information

Backups

At the moment we don't back up users' data in their respective home folders. We therefore advise users to keep their own backups.
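One possible way to keep your own copy is to pull a directory from the cluster to your workstation with `rsync`. The sketch below only builds the command and defaults to a dry run. The user name `jdoe`, the host name `hpc.example.org`, and both directory paths are placeholders, not real values for this cluster.

```python
import subprocess


def rsync_command(user, host, remote_dir, local_dir, dry_run=True):
    """Build an rsync command that copies remote_dir into local_dir."""
    cmd = ["rsync", "-az"]  # -a: archive mode, -z: compress during transfer
    if dry_run:
        cmd.append("--dry-run")  # report what would be copied, copy nothing
    # A trailing slash on the source copies the directory's contents.
    source = f"{user}@{host}:{remote_dir.rstrip('/')}/"
    cmd += [source, local_dir]
    return cmd


def run_backup(user, host, remote_dir, local_dir):
    """Run the real transfer; raises CalledProcessError if rsync fails."""
    subprocess.run(
        rsync_command(user, host, remote_dir, local_dir, dry_run=False),
        check=True,
    )


if __name__ == "__main__":
    # Placeholder values; replace with your own account and paths.
    cmd = rsync_command("jdoe", "hpc.example.org", "~/project", "backups/project")
    print(" ".join(cmd))
```

Reviewing the dry-run command first and only then calling `run_backup` makes it harder to copy into the wrong directory by accident.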

using-the-cluster.1530772246.txt.gz · Last modified: by aorth